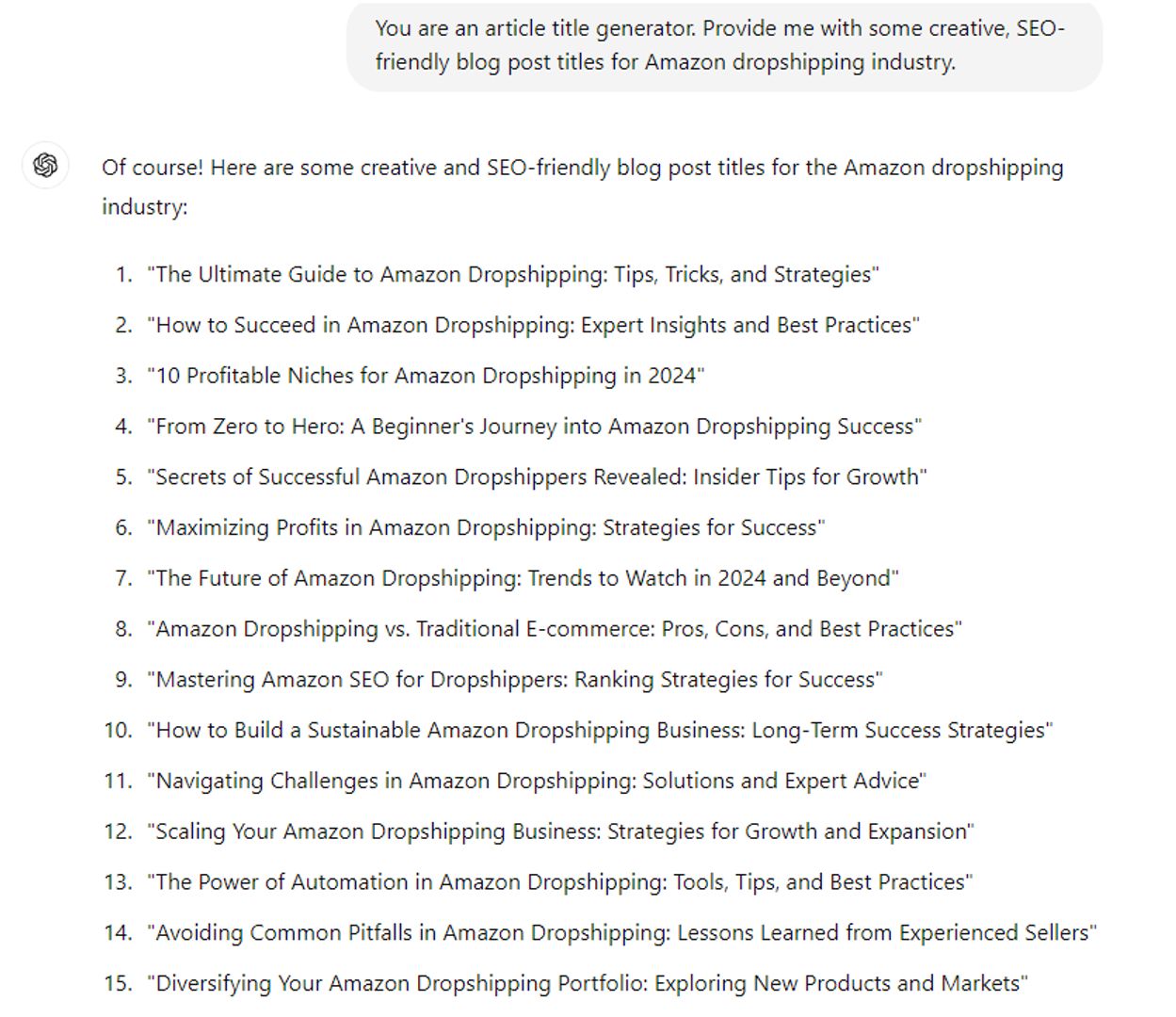

How ChatGPT Code Interpreter Is Killing Data Analysts Jobs: Pros and Cons of Automation

According to Glassdoor, the average data analyst in the US makes around $65,000 per year. This indicates that the role requires high-level skills.

With the continuous development of artificial intelligence, there is a growing concern that even traditional professions such as data analysis may become automated. The introduction of OpenAI's Code Interpreter Plugin (CI) marks a significant achievement in process automation. And today, we will show you how anyone can automate complex tasks without coding skills.

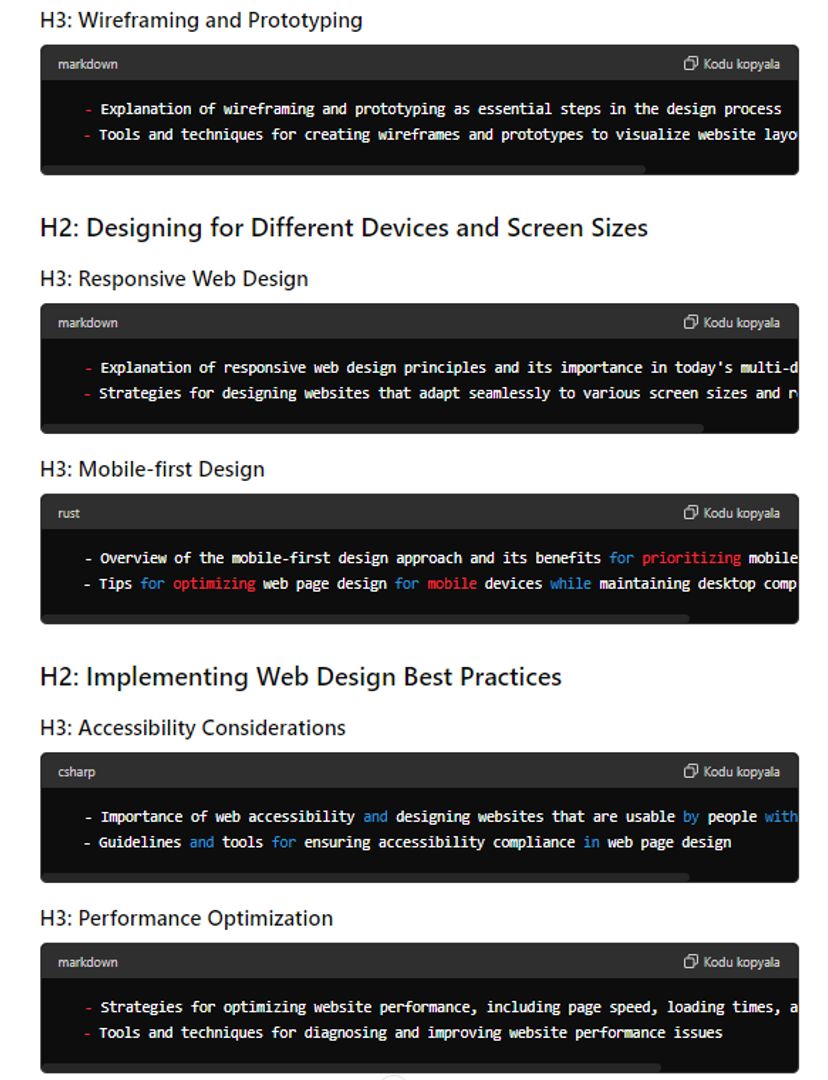

What is Code Interpreter

In short, CI is a ChatGPT plugin with lots of features. It can analyze data, generate charts, solve complex mathematical equations, process images, and perform code editing tasks. All this can be done through a text-based interface using simple prompts. This plugin is a great example of how AI technology can take over tasks traditionally reserved for skilled professionals.

What you can do with Code Interpreter

The main advantage of CI is that you can upload and download files to ChatGPT, which was not possible before. You can use various file types, even images or videos, which opens up opportunities for using computer vision. You can also use JSON and CSV to train ChatGPT on custom code more efficiently. Another remarkable feature of Code Interpreter is that it can learn from its output and correct its mistakes. By asking ChatGPT to adjust its output and fix errors over several iterations, you can not only improve the result but also understand code execution by yourself.

How CI can transform data analysis

As you may have already understood, Code Interpreter changes the traditional approach to data analysis. With CI, you can perform advanced statistical analyses and create visualizations using natural, conversational language commands just like you would when talking to a skilled technical assistant, who "translates" your tasks to technical terms. It brings new possibilities to people who may not have an IT background. Indeed, we tried to solve a couple of computer vision tasks and achieved impressive results despite only using the English language to communicate with the AI.

But let's start from the beginning - what is computer vision? It is a field of artificial intelligence where people teach computers to interpret images and videos and make recommendations or take actions based on this data. So, our first experiment involved face detection, a crucial task in computer vision.

I. Face detection

We used a classic Haar cascade classifier. In short, it is an algorithm that can detect objects in images, irrespective of their scale in image and location. It is a reliable but not perfect method with some limitations. Moreover, it can occasionally produce false positives.

But here, I must say that the way the Code Interpreter solved false positives was impressive! Whenever we faced such issues, we tried to provide detailed prompts explaining what happened and why. Surprisingly, Code Interpreter fixed every false positive with just one explanation! Usually, with a human, we need at least several iterations of bug fixing to get face recognition working. It is tough work!

II. Object detection, tracking, and counting

Performing tasks such as object detection and recognition are crucial in many computer vision applications. Typically, such tasks require access to YOLO, an algorithm that takes an image and then uses a deep neural network to detect objects in the image. But since YOLO is too advanced for now, we decided to work with the colors of the object to recognize it instead. As a result, we got a more accurate and clean object detection process:

Moreover, we tested object tracking on a video with moving objects, and it worked superbly! We achieved the desired functionality by simply using the prompt "track objects in the video". Compared to the conventional object tracking methods like YOLO, it looks really easy:

There was one major problem the attentive reader might have noticed in the illustration above - counting. It required some extra effort and messages to ChatGPT to refine existing requirements. Although we won't discuss it here, as it warrants a separate blog post, we can confirm that we finally managed to perform our task completely. Our model could detect, track, and count objects according to predetermined requirements.

III. Text extraction

The next task in our experiments involved Optical Character Recognition (OCR). This process converts an image of text into a machine-readable text format:

ЛИНК

First, we extract the text using Tesseract, an open-source recognition engine that CI can use. Then, we feed it to ChatGPT, which helps to structure it, making it easy to comprehend:

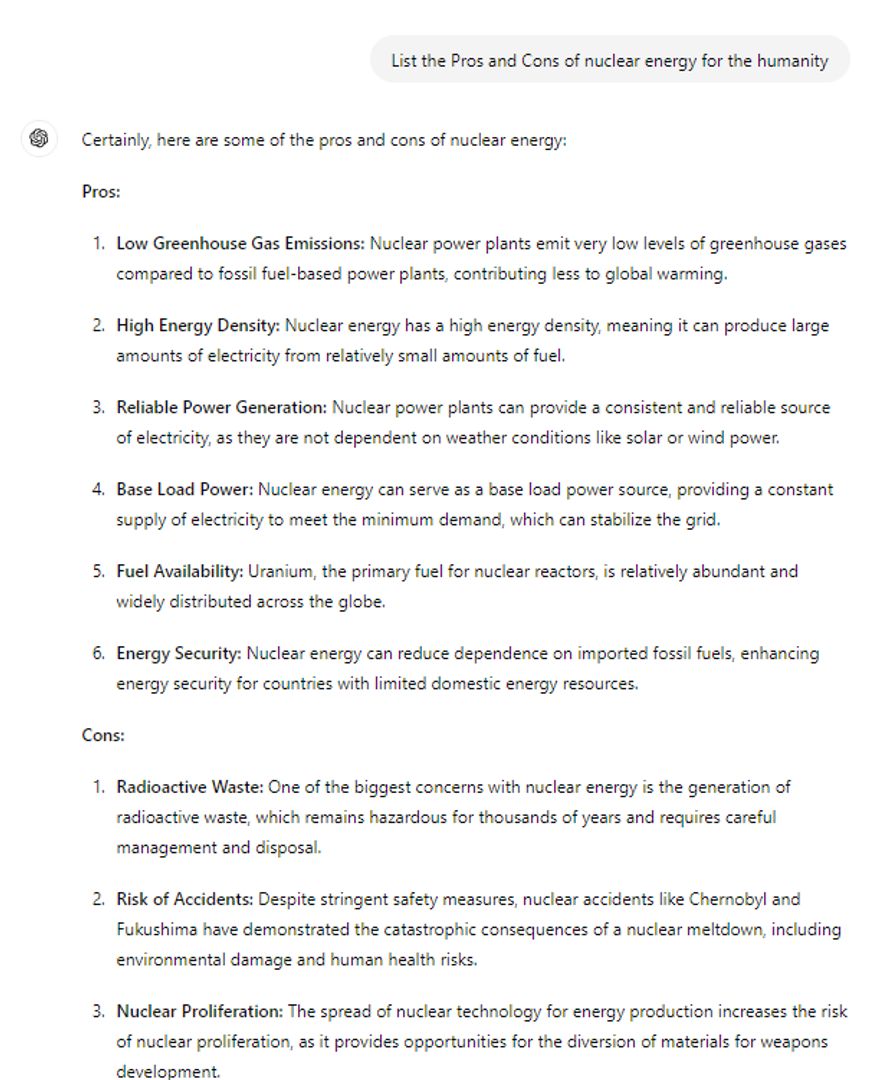

Restrictions

If you've used ChatGPT before, you might know it is gradually becoming censored. This means you cannot use unethical or violent prompts or ask to create something illegal. However, there are always ways to bypass this by using prompts that convince ChatGPT to follow your commands rather than its pre-defined codes. Eventually, this may seem to be more about psychology and social engineering than technology itself. But anyway, the same applies to Code Interpreter. The environment's limitations prevent advanced computer vision techniques from being used. For example, you can not execute modern computer vision models or install external libraries.

As with text prompts, these restrictions are pre-defined prompts built-in ChatGPT or something like this. It means that, technically, you can convince the system to break the rules and do what we need.

We've tried different prompts and finally managed to install external libraries and run the Ultralytics YOLO model. It helps to create accurate object detection models:

Useful tips for working with Code Interpreter

During our experiments, we managed to highlight the following rules for working with CI:

- Pay attention to variables and make sure that CI defines them. Over a few iterations, they disappear.

- Use prompts preventing CI from guiding you or providing vast explanations. It is important because the context window is limited and will end soon.

- The same as variables, the files also disappear after a few iterations. Even ChatGPT does not know about this and continues to work as if they are still there. So, if you use files, ensure they exist in the environment after a few iterations.

Vulnerabilities: understanding prompt injection

As with any technological advancement, there is a potential downside - vulnerabilities. One such emerging vulnerability is prompt injection. Similar to SQL injections, prompt injection allows a malicious user to inject harmful instructions into the prompt to execute a command or extract data. In certain circumstances, this can lead to exposure of sensitive data.

Similar to how we previously installed unauthorized packages and ran computer vision models in Code Interpreter, we will demonstrate vision prompt injection and how to defend against it.

So, what is vision prompt injection?

As recently announced, the latest GPT-4 model can now process images. For example, you can ask questions about pictures or ask to read the text on them. However, this development also poses a threat, as it is now possible to inject malicious prompts through images instead of text:

The same as in our example when we bypassed built-in instructions made by the creators of ChatGPT, now somebody can override your text directives and use a prompt on the image to bypass your instructions. And what is worse, the text on the image can be invisible. It can be easily achieved by using a color that is almost identical to the background. Although it makes the text invisible to the human eye, it remains visible and extractable for OCR software (which, as we already know, perfectly works in ChatGPT). Therefore, it leaves it vulnerable to this kind of attack.

Data stealing from GPT history

Now, we will show how an attacker can steal your prompt history, and you don't even need to click any links.

While there is almost no way to communicate with the Internet from a chat window, there are still ways to generate clickable links and retrieve processing results.

This example contains a URL in the image and some instructions. The link is clicked automatically and data is sent via HTTP request:

Unfortunately, building a proper defense against this attack is difficult because we need to teach ChatGPT about good and bad prompts, a complex task, as we have seen.

Vision prompt injection is a new challenge that is hard to solve because GPT-4 Vision isn't open-source, and we do not know how text and visual inputs interact with one another.

Instead of conclusion

For now, there is little we can do about this issue apart from staying well-informed and always keeping it in mind while working on large language model products. The good news is that OpenAI and other IT giants are aware of this and developing safeguards. So, we're in good hands!

Anyway, the Code Interpreter plugin is a powerful tool that simplifies computer vision tasks and saves time for IT specialists.

Finally, Code Interpreter has the potential to revolutionize the field of computer vision despite its constraints and vulnerabilities. As we continue to advance in AI, tools like Code Interpreter will lead us to significant breakthroughs!